Markdown

GitHub

CI/CD

Serverless + CDN

Cool.

mkiuchi’s diary book 2 - page added


Recording TV to a hard disk is common today. It is not only for tech-savvy people; many non-technical people record to HDD all the time. In Japan, almost all TV programs are broadcast as MPEG2-TS. At Full HD quality, the bitrate is 12-20 Mbps, so a 2-hour movie or sports program takes roughly 20 GB of disk space. As a result, many people struggle with recordings filling up the HDDs at home. Including me. Some broadcasters may disagree, but stored recordings are definitely a part of the viewer’s memories, and viewers want to keep them as long as possible. Saving them to another HDD or to the cloud seems straightforward, but it does not work well.
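As a rough check of the disk-space figure, a constant-bitrate stream can be converted to a file size directly. This is a minimal sketch that assumes a constant bitrate and decimal gigabytes (10^9 bytes); the function name is hypothetical. The upper end of the 12-20 Mbps range comes out close to the roughly 20 GB quoted above.

```python
def recording_size_gb(bitrate_mbps: float, hours: float) -> float:
    """Size in decimal gigabytes of a constant-bitrate recording."""
    bits = bitrate_mbps * 1_000_000 * hours * 3600  # total bits in the stream
    return bits / 8 / 1_000_000_000                 # bits -> bytes -> GB


# Bitrate range quoted in the text, for a 2-hour program
for mbps in (12, 20):
    print(f"{mbps} Mbps x 2 h = {recording_size_gb(mbps, 2):.1f} GB")
```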

The easiest and most straightforward solution is to move your recordings to another bulk HDD or to cloud storage. But after a few years, your recordings may grow to 10-50 TB. Many people offloaded their recordings to flat-rate monthly cloud storage, so customers who paid little ended up consuming a large share of the storage capacity, far beyond what the cloud vendors had forecast. I’ve heard that some users tried to store 70 TB in cloud storage. Today, almost all cloud vendors have withdrawn flat-rate cloud backup plans and offer only “pay as you go” plans.

Storing your recordings on bulk HDDs is more reasonable in terms of cost, but huge recordings eat up a lot of your time. Moving “old” recordings to a bulk HDD takes a long time (sometimes several days). Once the move is done, you start to wonder how to keep the backup healthy and readable at any time. You may put a few HDDs into a RAID to avoid data loss when an HDD fails. As a result, you have to run several HDDs 24/7 in addition to your main disks. These disks look ugly, make noise, and make your home look geeky (your friends may be put off!).

Video compression is a hot topic in the tech world. The recently released, cutting-edge H.265 codec achieves roughly a 90% size reduction compared with MPEG2-TS. If you have a 20 TB movie archive, it shrinks to about 2 TB! The disadvantage of H.265 is its extraordinarily long compression time. Compressing a 2-hour movie takes about 50-80 hours. Fortunately, almost every movie compression task can be split up and run in parallel, which shortens the compression time. So here comes the need for a multicore, multinode processing environment. As you know, heuristic compression is quite efficient, but it requires a lot of computing resources. It’s hard to estimate how much computing is needed to get it done, but I think you can see why an elastic computing cluster is needed. (My estimate appears later in this article.)
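The idea of splitting one movie into independently encoded pieces can be sketched as follows. This is only an illustration under assumptions: the fixed 10-minute segment length, the function names, and the perfectly even division of work are all hypothetical, and a real splitter would also have to cut at keyframes and account for transfer time.

```python
import math


def plan_segments(duration_s: int, segment_s: int) -> list[tuple[int, int]]:
    """Return (start, length) pairs in seconds that cover the whole movie."""
    return [
        (start, min(segment_s, duration_s - start))
        for start in range(0, duration_s, segment_s)
    ]


def ideal_wall_clock_hours(total_encode_hours: float, segments: int, workers: int) -> float:
    """Best-case elapsed time when equal segments are spread over workers."""
    rounds = math.ceil(segments / workers)        # batches of parallel jobs
    return total_encode_hours / segments * rounds


# A 2-hour movie cut into 10-minute segments (assumed segment length)
segments = plan_segments(2 * 3600, 600)
print(len(segments), "segments, last one:", segments[-1])

# 50-80 encode hours (range from the text) spread over 4 workers
for hours in (50, 80):
    print(hours, "h single-node ->", ideal_wall_clock_hours(hours, len(segments), 4), "h")
```

Each `(start, length)` pair could then be submitted as its own batch job, which is the kind of workload a scheduler is designed for.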

Adaptive Computing has long provided the TORQUE batch scheduler, which combines individual computers into a cluster and presents users with aggregated computing resources. You can find out what TORQUE can do at http://www.adaptivecomputing.com/products/open-source/torque/ . AWS EC2 Spot Fleet can gather several EC2 Spot Instances into an elastic cluster. As you may know, EC2 Spot Instances are AWS’s “spare” computing capacity, so they are offered at a discount. You can use relatively large computing resources at a reasonable cost. You can read about AWS EC2 Spot Fleet here: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/spot-fleet.html . I’m going to mix the two.

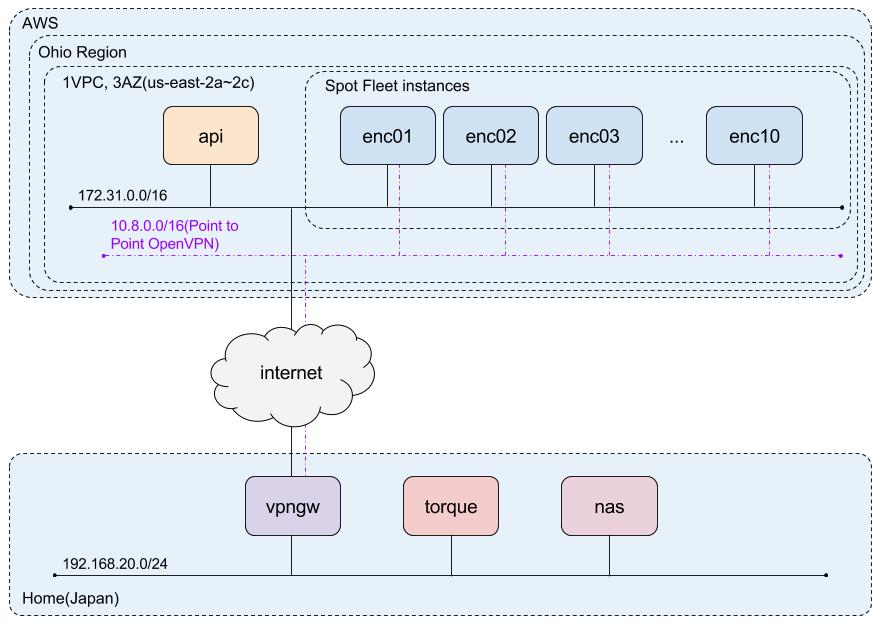

Here is a diagram I’ve put together. The upper half shows AWS. It consists of an “API server” and “encoding instances”. The API server administers the encoding instances and issues a certificate to each one so that it can open a VPN connection to my home. The lower half shows my home, where the TORQUE server and the data source live. The NAS serves source files to each encoding instance over the VPN and receives the compressed movies.


When you run several instances, each properly configured, the servers have to communicate with each other in an orderly way, but the time you have for writing actual code is limited. You cannot afford a complicated framework; you want something simple. Today, I think a REST API is the easiest way for servers to talk to each other. REST uses HTTP, so you can call the API with the curl command. It’s super easy. On the server side, I use Flask ( http://flask.pocoo.org/ ) to build the API server. Flask is a Python library that makes writing an API server very easy: about 5 lines of code are enough for a working HTTP API server. I wrote two API servers.

1. VPN certificate administrator: I use OpenVPN as the VPN software. OpenVPN requires each client to have its own certificate so that each connection to the server is unique. So I have to keep track of which certificate has been handed out to which instance, and which certificates have been returned when an instance terminates.
2. TORQUE computing node registrar: For now, TORQUE has only a CLI for registering and unregistering computing nodes. I put together a small REST API that receives an HTTP message from an encoding instance and translates it into a CLI command for the TORQUE server.

I’ve successfully glued them together, and finally the EC2 Spot instances work as part of my existing home TORQUE cluster! This has taken about 50 man-hours so far.
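The bookkeeping behind the two API servers can be sketched in plain Python. This is not the original code: the class, function names, certificate names, and instance IDs are hypothetical, and the `qmgr -c "create node ..."` / `qmgr -c "delete node ..."` form is an assumption about which TORQUE command the registrar wraps. A Flask route would call these functions and pass the command list to something like `subprocess.run`.

```python
import re


class CertificatePool:
    """Tracks which pre-generated OpenVPN client certificates are in use."""

    def __init__(self, cert_names):
        self._free = list(cert_names)   # certificates not handed out yet
        self._leased = {}               # instance id -> certificate name

    def lease(self, instance_id):
        """Hand out a certificate; repeated calls for one instance return the same one."""
        if instance_id in self._leased:
            return self._leased[instance_id]
        if not self._free:
            raise RuntimeError("no free certificate")
        cert = self._free.pop(0)
        self._leased[instance_id] = cert
        return cert

    def release(self, instance_id):
        """Return the certificate of a terminated instance to the pool."""
        cert = self._leased.pop(instance_id, None)
        if cert is not None:
            self._free.append(cert)
        return cert


_HOSTNAME = re.compile(r"[A-Za-z0-9][A-Za-z0-9.-]*")


def qmgr_command(action, hostname):
    """Build (but do not run) the assumed qmgr call for a node change."""
    if action not in ("create", "delete"):
        raise ValueError(f"unsupported action: {action}")
    if not _HOSTNAME.fullmatch(hostname):
        # Reject anything that is not a plain hostname before it reaches a CLI
        raise ValueError(f"invalid hostname: {hostname}")
    return ["qmgr", "-c", f"{action} node {hostname}"]


pool = CertificatePool(["client01", "client02"])
print(pool.lease("i-0001"))
print(qmgr_command("create", "enc-node-01"))
```

Returning the command as a list rather than a shell string keeps the hostname from being interpreted by a shell when the command is eventually executed.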

I selected the AWS Ohio region as the place to launch spot instances, because it offered the cheapest price for 4-core instances. AWS almost always prices “previous generation” instances lower. At first I experimented in the N. Virginia region, but it did not offer previous-generation 4-core instances, and the price changed frequently. I concluded that N. Virginia is so crowded that I could not run a computing job there for more than 24 hours. The Ohio region offers previous-generation 4-core instances at a low price, and its spot price is more stable than in N. Virginia. So I decided to use the Ohio region and to bid $0.03/hour per instance. Then I configured Spot Fleet and launched just one instance, to measure how long my computing task takes and estimate the budget needed to complete the mission. Here are the execution time details of my first job. This is the result of encoding a roughly 2-hour movie.

And here is the billing snapshot from AWS.

* Data Transfer: $0.46
  + $0.000 per GB - data transfer in per month: $0.00 (45.294 GB)
  + $0.000 per GB - first 1 GB of data transferred out per month: $0.00 (1 GB)
  + $0.090 per GB - first 10 TB / month data transfer out beyond the global free tier: $0.45 (5.048 GB)
* Elastic Compute Cloud: $2.19
* Total: $2.68

So I can say that AWS costs about $3.00 per 2-hour encode. Next, I looked at how many movies I have to compress. From a rough survey, I found that I have roughly 1500 movies totalling about 840 hours. So I can calculate like this.
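As a rough sketch, and assuming the cost scales linearly with running time (the function name is hypothetical), the per-job figure can be extended to the whole 840-hour archive. With the rounded $3.00 per 2 hours this gives 420 jobs and $1,260 in total.

```python
def total_cost_usd(movie_hours, cost_per_job_usd, job_hours=2.0):
    """Linear scaling: number of 2-hour jobs times the cost of one job."""
    return movie_hours / job_hours * cost_per_job_usd


# Rounded figure used in the text, then the exact billed total
print(total_cost_usd(840, 3.00))
print(round(total_cost_usd(840, 2.68), 2))
```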

To encode all of the movies within a reasonable time window, I would need to prepare like this.
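How many instances a given time window requires can be estimated from the figures already quoted. The sketch below assumes the 50-80 encode hours per 2-hour movie mentioned earlier, perfect parallelism, and no idle time; the function name and the choice of a 35-day window as the example are assumptions, not the original table.

```python
import math


def instances_needed(movie_hours, encode_hours_per_2h, window_days):
    """Instances required to finish all encodes inside the time window."""
    instance_hours = movie_hours / 2 * encode_hours_per_2h  # total work
    return math.ceil(instance_hours / (window_days * 24))   # spread over the window


# 840 hours of movies, a 35-day window, both ends of the 50-80 hour range
for encode_hours in (50, 80):
    print(encode_hours, "h per job ->", instances_needed(840, encode_hours, 35), "instances")
```

Under these assumptions a shorter window needs more instances but the same total instance-hours, so the window mainly changes how long you wait rather than what you pay.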

Hmm… 35 days of encoding time (Case 2) looks nice to me. How much does it cost? Like this.

Damn. This cost is too much for me.

The previous estimate assumes that each encoding server has 4 vCPUs (cores). If I increase the number of vCPUs per server, I will not need as many servers. The Ohio region also offers the i2.8xlarge instance, which has 32 vCPUs and can take on the work of 8 servers in 1. The price of i2.8xlarge is $0.83 per hour. So, how much does the cost change?
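One way to compare the two instance sizes is price per vCPU-hour. This sketch assumes encoding throughput scales linearly with vCPU count and that the 4-vCPU instance runs at the $0.03/hour bid; the function name is hypothetical.

```python
def price_per_vcpu_hour(price_per_hour, vcpus):
    """Hourly price divided by the number of vCPUs."""
    return price_per_hour / vcpus


small = price_per_vcpu_hour(0.03, 4)    # 4-vCPU instance at the bid price
large = price_per_vcpu_hour(0.83, 32)   # i2.8xlarge price from the text

print(f"4 vCPU:     ${small:.4f} per vCPU-hour")
print(f"i2.8xlarge: ${large:.4f} per vCPU-hour")
print(f"ratio: {large / small:.2f}x")
```

Put another way, eight small instances cost 8 x $0.03 = $0.24 per hour against $0.83 for one i2.8xlarge doing the same work.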

Unfortunately, the larger instance does not seem to help me much.

This trial shows that a distributed computing cluster could help with the recorded movies piling up in your storage. Unfortunately, heuristic compression on a public cloud costs so much that it will not fit your budget. I’ll try a different approach and write about it later. Stay tuned!

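I’m a beginner at all sorts of things. Study, study. Here are some scribbled notes on what I’ve learned.

* Uploading a self-made image is painfully slow. Uploading an image of about 5 GB takes about 6 hours. That works out to a bit under 2 Mbps. So slow. Updates from Apple and the like download blazingly fast, so I wish uploads could manage something similar. On top of that, timeouts occur constantly during the upload, so the --retry option is a must for ec2-upload-bundle. This killed my upload several times.

* If ec2-bundle-image fails, check the free disk space. Unless you specify a destination with the -d option, the bundle is created in /tmp, so it fails if there is not enough free space there.

* If ec2-register fails, recreate the certificate and retry.

* It seems that the kernel and initrd must be the ones built by Amazon and others. “It seems” sounds vague, I know, but that is apparently how it is. In other words, the various methods published for building your own AMI cover only the root disk part that comes after bios -> kernel -> initrd ->. Should I understand it as “you can build everything from init onward yourself”? I don’t really know.

* AWS Management Console, Elasticfox, and ssh are all sluggish. Everything feels terribly sluggish; is this just how it is? I don’t really know.

I still haven’t managed to boot from my own AMI. But it has been a while since I got this stuck on something, and it may actually be fun. It hasn’t cost much either: about $1 for a week of use.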
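The “a bit under 2 Mbps” estimate for the image upload in the notes above can be checked with a short calculation. This sketch assumes decimal gigabytes and a steady transfer rate; the function name is hypothetical.

```python
def throughput_mbps(size_gb: float, hours: float) -> float:
    """Average throughput in megabits per second for a transfer."""
    bits = size_gb * 1_000_000_000 * 8   # decimal GB -> bits
    return bits / (hours * 3600) / 1_000_000


# About 5 GB uploaded in about 6 hours
print(f"{throughput_mbps(5, 6):.2f} Mbps")
```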